Hi again everybody! I’ve decided to combine two blog posts into one: a product review and a new article in my microcontroller programming series. It’s a new concept that I’d like to explore for some of my microcontroller programming articles–concrete examples using actual products. Let me know if you’d like to continue to see posts like this in the future!

Introduction

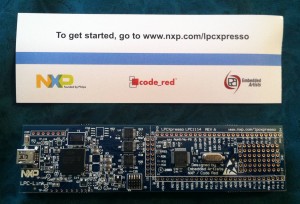

Thanks to Newark/Farnell, I’ve had the opportunity to review several microcontroller development boards over the past year (see the product reviews category on my blog for more of these). Today, I will be reviewing the LPCXpresso OM11049 board. It’s a very simple development board with a built-in USB programming interface. You can plug it into your computer and use Code Red’s free LPCXpresso IDE to write code, compile it, and flash the resulting binary to the microcontroller. The microcontroller portion of the board is bare: nothing is connected to its pins other than an LED on a single GPIO pin. The rest of the pins are brought out to headers. The idea is that you can build whatever circuit you’d like on a breadboard, plug the OM11049 board into it, and go from there. You can also buy one of several pre-made baseboards with peripherals already attached. For the purposes of this review/microcontroller series post, though, I’m going to stick with using the OM11049 board by itself.

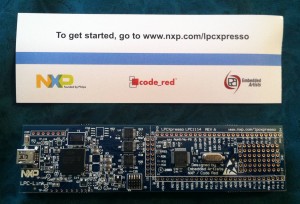

It comes in a plain-Jane envelope (forgive me, Grandma, for that terrible and inaccurate choice of cliché):

Here’s the board! It’s really long, and there’s a good reason for that. I’ll explain why in just a minute…

For those of you following the microcontroller series, you may be wondering where I’m going with this — it seems like a product review so far. What I’m going to do in the process of reviewing this board is walk through setting up a timer and GPIO as a real-world concrete example with code you can try out for yourself. In addition, I’m going to do it with interrupts, so we can see how to use a timer interrupt to keep track of time. This should apply knowledge you have picked up from my GPIO, timer, and interrupt articles. Ready? Let’s go!

The OM11049 board is so long because it’s actually two boards in one. The first half (on the left in the picture) is an LPC-LINK debugger, which appears to be implemented on an NXP LPC3154 chip. This half of the board is extremely useful because it allows us to program the microcontroller on the second half of the board without owning an expensive debugger board.

The other, more interesting half of the board is the target side of the board. This half of the board contains the microcontroller we will be programming. It has an NXP LPC1114/302 ARM Cortex-M0 microcontroller. According to the microcontroller’s datasheet, it can run at up to 50 MHz and it has 32 KB of flash memory, 8 KB of SRAM, one UART, one I2C peripheral, one SPI peripheral, 8 ADC channels, and 28 GPIO pins. For those of you who have been following my microcontroller series, you may recognize SPI and GPIO. For now, you can ignore the UART, I2C, and ADC capabilities — I will talk about them in future articles. Anyway, as I just said, this is a Cortex-M0. It’s very similar to the Cortex-M3 that I have mentioned in previous posts. I really like these types of microcontrollers because they’re 32-bit, easy to use, and common enough to find forum postings by plenty of other people using them when you need help.

In the schematic (see page 34) for the OM11049 board, we can see that there is a red LED connected to pin 23 (PIO0_7/CTS) of the microcontroller. We will need to remember this when we start writing some code.

Just a quick sidenote: you may notice that the LED is not the only thing connected to the pin. There is also a 2K resistor in series with the LED. The resistor is there because LEDs are only designed to have a certain amount of current running through them. If you allow too much current to flow through, you will blow the LED. The resistor limits the current that can flow through the LED. (For those of you who took the electricity portion of a physics class, you may remember that V=IR, or rearranged, I=V/R. The higher the resistance [R], the lower the current [I].)
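To put rough numbers on that, here is a minimal sketch of the I=V/R arithmetic in C. The 3.3 V supply and the 1.8 V forward drop for a red LED are my own assumptions for illustration, not values read off the schematic; only the 2K resistor comes from the board. The helper name led_current_microamps is hypothetical.

```c
#include <stdint.h>

/* Hypothetical helper: current through an LED and its series resistor, in microamps.
 * The resistor only sees the supply voltage minus the LED's forward voltage. */
static uint32_t led_current_microamps(uint32_t supply_mv, uint32_t led_forward_mv,
                                      uint32_t resistor_ohms)
{
    if (supply_mv <= led_forward_mv || resistor_ohms == 0) {
        return 0; /* LED does not conduct, or the input makes no sense */
    }
    /* millivolts / ohms = milliamps, so scale by 1000 to get microamps. */
    return ((supply_mv - led_forward_mv) * 1000u) / resistor_ohms;
}
```

With those assumed values, led_current_microamps(3300, 1800, 2000) works out to 750 microamps (0.75 mA), comfortably below the tens of milliamps that small indicator LEDs are commonly rated for.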

So let’s get started and set up the programming environment! By the way, if you don’t have a USB A-to-mini-B cable, you will need one in order to use the board — it does not come with one. However, pretty much everyone has such a cable these days, so I can’t really fault the makers of this board too much for not including one.

Setting up the programming environment

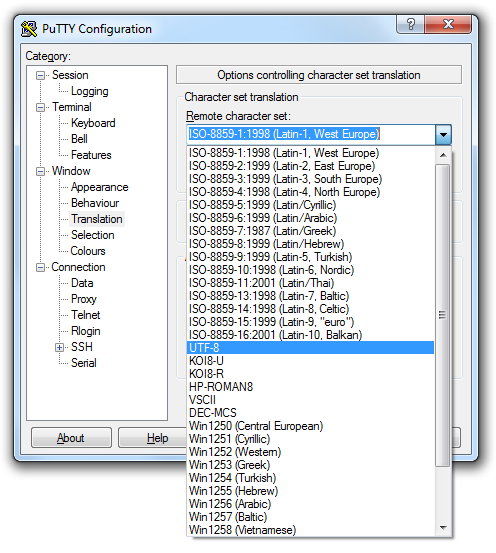

Install the LPCXpresso IDE, open it up, and follow the supplied directions to register it. Got that done? Good! Now let’s move on.

First of all, we need to import the CMSIS library for the LPC1114. In the LPCXpresso window in the bottom left, there should be a section called Start here with choices such as New project and Import project(s). Click Import project(s). Under Project archive (zip) click Browse. I’m not sure exactly where it will default to, but you want to go into the “lpcxpresso/Examples/NXP/LPC1000/LPC11xx” directory and choose “CMSISv2p00_LPC11xx.zip”. This will install the CMSIS libraries version 2.0. Click Next, make sure CMSISv2p00_LPC11xx is checked, and click Finish.

What is CMSIS?

CMSIS stands for “Cortex Microcontroller Software Interface Standard”. It basically provides a set of functions and macros that are common between microcontrollers. Imagine you originally use a Cortex-M0 microcontroller from manufacturer #1, and then you decide to change to a Cortex-M0 made by manufacturer #2. A lot of the features between the two processors will be the same because they share the same microcontroller core. You shouldn’t have to rewrite a bunch of your code that works with the microcontroller core just because the two manufacturers organized their libraries differently. The idea behind CMSIS is to avoid the situation I just described by standardizing on the common functionality so it isn’t implemented differently in the libraries supplied by two different manufacturers. If you use a common peripheral like the SysTick timer (which we will do in this article–more on this later), you can expect it to work exactly the same between all Cortex-M0 processors.

It’s more than just that, though. The CMSIS libraries also include startup code that handles things like setting up the processor’s clock rate correctly. They also tend to standardize how peripherals are accessed: the libraries tend to define a struct (e.g. struct LPC_TIMER) that contains all of the register definitions belonging to a peripheral. This further modularizes each peripheral and makes code easier to read. The bottom line is that CMSIS is a very good idea.
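Here is a small sketch of what that struct-per-peripheral style looks like in practice. Everything named demo_ is hypothetical: the two-register layout is invented for illustration (the real definitions live in LPC11xx.h), and a plain variable stands in for the memory-mapped registers so the snippet can run on a PC.

```c
#include <stdint.h>

/* Hypothetical register block for illustration only. */
typedef struct {
    volatile uint32_t DATA; /* pin states */
    volatile uint32_t DIR;  /* 1 = output, 0 = input */
} demo_gpio_regs;

/* On real hardware this would be a fixed peripheral address cast to a
 * struct pointer. Here ordinary storage stands in for the registers. */
static demo_gpio_regs demo_gpio_storage;
#define DEMO_GPIO (&demo_gpio_storage)

static void demo_led_setup(unsigned pin)
{
    DEMO_GPIO->DIR |= (1u << pin);  /* make the pin an output */
    DEMO_GPIO->DATA |= (1u << pin); /* drive it high */
}

static void demo_led_toggle(unsigned pin)
{
    DEMO_GPIO->DATA ^= (1u << pin); /* XOR flips just this one bit */
}
```

The nice part of the pattern is that code reads as "peripheral, arrow, register", which is the same shape you will see later in main.c.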

It’s not completely perfect, though. If you do SPI (for example) on a chip by manufacturer #1, chances are the SPI peripheral on the chip by manufacturer #2 has a completely different register layout. There’s nothing you can do about this — the peripherals are just plain different and CMSIS does not do any abstraction at this level. So you still end up having to write different code for that kind of stuff. Anyway, that’s enough about CMSIS. Back to the point of this article.

Continuing on…

Now, you’re ready to create a project for the LPC1114. Click on New project in the same bottom-left pane we used earlier. Click the arrow next to NXP LPC1100 projects and choose C Project. Click Next and give your project a name–I chose LPCXpressoTest. Click Next and choose LPC1114/302 as your target. Click Next once again. In the screen that comes up next, you should notice that the CMSIS project we imported earlier is chosen as the CMSIS library to link against. You’re done–click Finish to create the project.

At this point, you have a simple test project all ready to go. In the Project Explorer on the left, find LPCXpressoTest, and expand it by clicking the arrow next to it. Click the arrow next to the src folder that appears, and you should see cr_startup_lpc11.c and main.c.

cr_startup_lpc11.c is a default provided file that handles all of the startup process. It will load any necessary data from flash into RAM when the microcontroller first starts up, and it also provides default interrupt handlers for all interrupts.

We’re not really interested in this file, though — it’s pretty good without any modifications. The really important file is main.c. This is where we can put our own code. It contains the entry point where code will first start running. We’ll also create extra source files that implement smaller (possibly reusable) pieces of the program, so we don’t end up with a single huge file. But before we start coding…

What is this program going to do?

It’s awfully easy to just sit down and start coding (and I’ve done it many times when getting a new microcontroller board), but since I’m writing a tutorial, I suppose I should have some kind of a goal in mind so you know where we’re going. Here’s what this program will do:

- I want to implement a timer system. Anything can register with the timer and ask to be notified after a specified number of milliseconds have elapsed. Multiple items can be registered at the same time, although we won’t do that in this program.

- Using this timer system, I want the LED on the LPCXpresso board to blink slowly. As the program goes on, the blink rate will increase until it’s extremely fast, and then go slower and slower until it’s back at the original slow blink rate, and repeat the cycle.

Let’s start coding!

Let’s start by thinking about how to split this program up. The timer is its own complete system, so it should probably be completely separated from the rest of the program. That way, I can reuse it in future coding projects. I’m not going to bother creating a separate module for the LED because it’s so simple, although it would honestly be a good idea in a real program to split it up into its own simple module (even if it’s just a header file with some macros). The idea here is to eliminate creating a single huge file that implements everything. It helps separate responsibilities of the program and increase reusability.

timer.h

Let’s start with the timer. We’ll start with the header file. Right-click on the src directory and choose New->Header File. Name it timer.h and click Done. Easy, right? With timer.h open, we’ll now think about what this system of the code needs to do. First of all, it will need an initialization function. It’ll also need a way for other parts of the program to register with the timer system. In order to keep track of everything registered with the timer, we’ll need to define a struct that contains all necessary info to keep track of registrations.

We’re going to store the timer registrations as a linked list. Something registering with the timer will provide a callback function that will be called when the timer is ready. We’ll also allow a caller to provide a pointer to something that will be passed to the callback. This could end up being useful for future applications, but we won’t use it in this program.

Finally, although the timer itself is interrupt-driven, we will want to use the main loop to manipulate the timer list, so a periodic task will need to check with the timer list to find any timers that have expired and execute their callbacks. Otherwise, we would have to cross the boundary between interrupts and the main loop. For example, when a timer interrupt occurs, we have two choices. If a timer is ready to fire, we could immediately fire its callback from the interrupt handler, or we could signal the main loop which would then handle it the next time it checked all the timers. In most cases, the second option is probably the better option. Otherwise, you would have to make sure all of your callbacks were safe to call from interrupts, and you’d probably also have to worry about modifying the linked list of timers from the interrupt (this tends to overly complicate the functions that add to and remove from the linked list). Plus, the interrupt handler would run for a long time, and shorter interrupt handlers are usually better.

My point is that we will need a function that will periodically be called by the main loop to check if any timers have expired and dispatch their callbacks.
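As a stripped-down illustration of that second option (signal from the interrupt, do the work in the main loop), here is a sketch using a single flag. All of the demo_ names are hypothetical, and the "interrupt handler" is just an ordinary function so the snippet can be exercised on a PC.

```c
#include <stdbool.h>
#include <stdint.h>

static volatile bool demo_tick_pending = false; /* set by the ISR, cleared by the main loop */
static uint32_t demo_work_done = 0;

/* Stand-in for an interrupt handler: do the bare minimum and return. */
static void demo_tick_isr(void)
{
    demo_tick_pending = true;
}

/* Called from the main loop: the slow work happens outside interrupt context. */
static void demo_poll(void)
{
    if (demo_tick_pending) {
        demo_tick_pending = false;
        demo_work_done++; /* a real program would run its callbacks here */
    }
}
```

A flag like this can only say "at least one interrupt happened", not how many, which is one reason the timer module in this article counts ticks per timer instead.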

With that in mind, here’s timer.h:

```c
#include <stdint.h>

struct timer {
    volatile uint32_t ticks_remaining;
    void (*callback)(struct timer *, void *);
    void *callback_data;
    struct timer *next;
};

void timers_init(void);
void timers_check(void);
void timer_add(struct timer *t);
```

(Stick that code inside of the #ifndef/#define and #endif include guard that LPCXpresso generates automatically.)

The timer struct is something that anybody registering with the timer will create. The calling code will fill in the struct’s callback, callback_data, and ticks_remaining members, and pass the struct to timer_add(). The next member of the struct is used to keep a linked list. The main loop of the program will call timers_check() periodically to find out if any timers have expired. The callback member might look funny if you’re not familiar with function pointers. Basically, it just lets you give the address of a function to the timer system. The function will have the prototype “void blah(struct timer *t, void *data)”. This is a commonly-used pattern for implementing callbacks. You tell the timer module what function to call. When the timer is ready, it will call the function for you. We’ll implement that code below so you can see it with your own eyes.
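If function pointers are new to you, this tiny sketch shows the callback pattern on its own, without any timer logic. The demo_ names are hypothetical, and the struct is a cut-down imitation of struct timer, not the real thing.

```c
#include <stddef.h>

struct demo_event {
    void (*callback)(struct demo_event *, void *); /* function to call */
    void *callback_data;                           /* handed back untouched */
};

/* A callback matching the prototype: it treats the data pointer as a counter. */
static void demo_count_calls(struct demo_event *e, void *data)
{
    (void)e;
    int *counter = data; /* the caller knows what the pointer really is */
    (*counter)++;
}

/* The "module" side: call whatever function was registered. */
static void demo_fire(struct demo_event *e)
{
    if (e->callback != NULL) {
        e->callback(e, e->callback_data);
    }
}
```

Registering is just filling in the struct, for example `struct demo_event e = { demo_count_calls, &hits };` with an int named hits, and every call to demo_fire(&e) then bumps hits by one.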

Notice that we made the ticks_remaining member volatile. This is because ticks_remaining will be updated by both the interrupt handler and the main loop, so it’s best to make it volatile to ensure all accesses to it always grab the latest contents from RAM. In this particular case, I don’t think that making it volatile will actually change the generated machine code at all, but it’s a good practice to get in the habit of doing, so we should make it volatile anyway.

Now, let’s implement the actual code that the timer uses. I’ll try to explain how this code works (it helps if you’re familiar with linked lists). Do the same type of thing you did to create the header file, but this time choose New->Source File.

timer.c

```c
#include "timer.h"
#include <stdlib.h>
#include "LPC11xx.h"

static struct timer *active_timers_head = NULL;
static struct timer *active_timers_tail = NULL;

void timers_init(void)
{
    // Turn on the system tick timer with an interval of once per millisecond.
    SysTick_Config(SystemCoreClock / 1000);
}

// Call this function periodically from the main loop.
void timers_check(void)
{
    struct timer *prev_t = NULL;
    struct timer *t = active_timers_head;

    while (t)
    {
        // Has this timer expired?
        if (t->ticks_remaining == 0)
        {
            // Grab a pointer to the next item in the list.
            struct timer *next_t = t->next;

            // Remove t from the list.
            // Two cases:
            // 1) t is the first item in the list.
            // 2) t is not the first item in the list.
            if (t == active_timers_head)
            {
                // t is the first item in the list. Set the head
                // of the timers list to SKIP t. As long as we do
                // this operation first, we won't mess up the
                // interrupt handler.
                active_timers_head = next_t;
            }
            else
            {
                // t is NOT the first item in the list. Set the
                // previous item in the list's "next" pointer to
                // skip t, effectively removing it from the list.
                // As long as we do this operation first, we won't
                // mess up the interrupt handler.
                prev_t->next = next_t;
            }

            // Update the tail pointer if the last item in the list
            // was removed.
            if (t == active_timers_tail)
            {
                active_timers_tail = prev_t;
            }

            // Do the callback for it now that it has been removed
            // and the list is in a consistent state.
            t->callback(t, t->callback_data);

            // No need to update "prev_t" -- it hasn't changed.
            // Move on to the next item in the list, using the saved
            // value of "next" from earlier.
            t = next_t;
        }
        else
        {
            // This timer hasn't expired yet, so just move on to
            // the next item in the list.
            prev_t = t;
            t = t->next;
        }
    }
}

void timer_add(struct timer *t)
{
    // Append the item to the end of the list, so its "next" is NULL.
    t->next = NULL;

    if (!active_timers_tail)
    {
        // First item being added to an empty list
        active_timers_tail = t;
        active_timers_head = t;
    }
    else
    {
        // Item is being appended to the end of a nonempty list.
        active_timers_tail->next = t;
        active_timers_tail = t;
    }
}

void SysTick_Handler(void)
{
    // Here is the interrupt handler that counts ticks.
    struct timer *t = active_timers_head;

    while (t)
    {
        // If we can, decrement the number of ticks remaining.
        if (t->ticks_remaining > 0)
        {
            t->ticks_remaining--;
        }

        t = t->next;
    }
}
```

Okay, that was a LOT of code, but don’t panic. I’m going to go into detail about each function now. First, let’s look at the static variables at the top of the file. We defined head and tail variables to keep track of a linked list of timers. Head points to the first timer and tail points to the last. Both are NULL when the list is empty. As far as I can tell, they do not need to be volatile because the interrupt handler doesn’t ever modify them.

SysTick_Handler

As for the functions, I’m going to start in reverse. Let’s look at the SysTick handler first so we can keep it in mind in the background as we look at the other functions. The SysTick handler will fire once every millisecond. If you’re familiar with linked lists, you will realize this is just a simple implementation of stepping through all items in a linked list. Each item’s “next” pointer points to the next item in the list, until the last item’s “next” pointer is NULL. It decrements the tick counter for each item in the list (but doesn’t decrement it if it’s already at 0–that would cause it to wrap around back to 0xFFFFFFFF, which would be very bad!). Pretty simple, right? Now keep in mind that at ANY point in the main loop of the program when interrupts are enabled, this function could fire. So we need to be careful about how we do things to make sure that the linked list is always in a consistent state while interrupts are enabled — otherwise the interrupt handler could step off the end of an inconsistent list and all kinds of weird behavior would happen. I’ve seen it firsthand in past projects when I made a mistake! I’ll explain what I mean when we look at the timer_add() function below.
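The wrap-around mentioned above is easy to see in isolation. This sketch compares the guarded decrement the handler uses with an unguarded one; both demo_ function names are hypothetical.

```c
#include <stdint.h>

/* Decrement a tick counter but never go below zero, like the handler does. */
static uint32_t demo_tick_down(uint32_t ticks)
{
    return (ticks > 0) ? ticks - 1 : 0;
}

/* What happens without the guard: unsigned arithmetic wraps modulo 2^32. */
static uint32_t demo_unguarded_tick_down(uint32_t ticks)
{
    return ticks - 1;
}
```

Calling demo_unguarded_tick_down(0) yields 0xFFFFFFFF, so an expired timer would suddenly look like it had about 49.7 days of milliseconds left.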

Note that this function must be named exactly “SysTick_Handler”. The vector table in the startup file refers to the SysTick interrupt handler by that exact name, so the linker will hook up our function in place of the default handler. Also, as you may recall from my interrupt handlers article, the Cortex-M series is really cool in how it handles interrupts, so your interrupt handler only has to be a standard C function.

timer_add

Now, let’s talk about timer_add(). It’s a really simple function that adds a timer to the end of the linked list of timers. If you don’t follow the logic of what I’m doing in the function, read up on linked lists (in particular, this is a singly-linked list). There are two possibilities when the function is called — either the list is empty, or it’s not. If it’s empty, both the head and tail pointers will be NULL, so we need to set those variables to both point to the item we will add. If it’s nonempty, we just need to set the old tail’s “next” pointer to point to the newly-added item and then update the list’s tail pointer to point to the new end of the list.

Let’s think about this function from the perspective of interrupt safety. We know that at any moment, an interrupt might fire that would step through the complete linked list. In order to keep the list consistent, we must set the new item’s “next” pointer to NULL before adding it to the list. Otherwise, an interrupt could fire after adding it to the list, but before changing its “next” pointer to NULL. If the “next” pointer happened to be some random uninitialized value, the interrupt handler would treat it as a pointer to the next item in the list and continue stepping past the end of the list, eventually probably causing the program to crash when it tried to access an invalid address, or it might end up in an infinite loop if it never happened to access a bad address. Either way, the behavior would be bad news. There would only be a small window of opportunity for a problem to occur (the interrupt would have to fire at JUST the right moment), but when you’re writing interrupt-safe code, Murphy’s Law always applies. Expect the unexpected!

Because the interrupt doesn’t ever access the “tail” pointer, we don’t have to worry about the order in which the tail is modified. But as I explained above, we definitely have to ensure that the new item’s “next” pointer is NULL before putting it into the list. The alternative to careful ordering of operations would be to disable interrupts while modifying the list–but if we can get away with not having to disable interrupts (which we can in this case), that’s the better way to go from the perspective of lessening your program’s interrupt latency.
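For comparison, here is a sketch of the alternative I just mentioned: masking interrupts while the list is modified. The demo_ names are hypothetical, and demo_irq_off()/demo_irq_on() are PC stand-ins for __disable_irq() and __enable_irq() so the snippet can run without hardware.

```c
#include <stdbool.h>
#include <stddef.h>

struct demo_node { struct demo_node *next; };

static struct demo_node *demo_head = NULL;
static struct demo_node *demo_tail = NULL;

/* PC stand-ins for __disable_irq() and __enable_irq(). */
static bool demo_irqs_enabled = true;
static void demo_irq_off(void) { demo_irqs_enabled = false; }
static void demo_irq_on(void)  { demo_irqs_enabled = true; }

/* Append with interrupts masked, so the order of the writes no longer matters. */
static void demo_add_locked(struct demo_node *n)
{
    demo_irq_off();
    if (demo_tail == NULL) {
        demo_head = n;
    } else {
        demo_tail->next = n;
    }
    demo_tail = n;
    n->next = NULL; /* deliberately last: only safe because interrupts are masked */
    demo_irq_on();
}
```

This is simpler to reason about, at the cost of delaying any interrupt that arrives during the update. Note that the sketch re-enables interrupts unconditionally. Real code would usually remember whether they were already disabled and restore that state instead.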

timers_check

Okay, this is the big function. You might want to open up another window next to this window so you can follow the code as I talk about it. I can’t really think of a great way to put the function inline with this text, so that might be the best plan of attack.

The basic idea of the function is simple, and it’s not too different from the interrupt handler. Loop through every item in the list, and find any timers that have a tick counter of 0 (meaning the timer has expired and it’s ready to fire). The trickiness comes from the fact that this function also removes such timers after calling their callback function.

Not only do we keep track of the current list item we’re at in the loop, but we also keep track of the previous list item. The reason we do this is to make deletions from the linked list easy. When you remove from a singly-linked list, you have to know what item was before the item you’re removing in the list. If we didn’t keep track of the previous item, we would have to write code to step all the way through the list again just to determine which item was before the item we’re removing. That would be a huge waste of processor time, so keeping an extra “previous” variable is a good way to fix that issue. For you CS algorithm geeks who like big O notation: normally, removing an arbitrary item from a singly-linked list is an O(N) operation. Because we’re already stepping through the list anyway to check all of the items, we’ve effectively turned the remove operation into an O(1) operation with the caveat that it’s performed inside a different O(N) operation.

This could also be fixed by making the list into a doubly-linked list where each item has both a “next” and a “previous” pointer (and thus a remove operation is always O(1)), but we didn’t really need that functionality in this program.

Anyway, if an item has expired, it is removed from the list by setting the previous item’s “next” pointer to point to the item after the item being removed from the list. Then, there’s special bookkeeping in case the head or tail pointer has to be updated.

The interrupt safety concern in this function is that the previous item’s “next” pointer (or the head pointer, if we’re removing the first item) has to be updated before ANYTHING else is done to the item being removed. That way, the list will be in a consistent state before and after that line of code. Before updating the previous item’s “next” pointer, the interrupt will step through the entire linked list and skip the expired item because it already has a tick count of 0. After updating the previous item’s “next” pointer to skip the item being removed (which is an atomic operation, so it’s safe to do with interrupts enabled), the interrupt will step through the entire linked list, but it’ll skip the expired item for a different reason: nothing has a “next” pointer that points to it anymore. Once nothing points to it, it’s definitely safe to fiddle with it as much as we want — there’s no way the interrupt will touch it. I hope those last couple of sentences weren’t too confusing — let them sink in until you follow completely, because it’s important.

Back to the rest of the function. Once an item has been removed from the list, we call the callback function on it, and then move on to the next item in the list, repeating the loop until we’ve looked at every item. The callback function might re-add the item to the list (mine does, as you’ll see later). Note: there are certain operations a callback handler could do to the linked list (mainly, removing other items from the list) that could cause problems because the loop’s pointer variables might end up pointing to an item that is no longer in the list. So in this implementation, please don’t remove items from the list inside a callback. A better solution might be to create a linked list of expired timers in the loop, and then go through another loop after the first one, calling each expired timer’s callback. I’m rambling now, but I just wanted to explain that this implementation is not 100% perfect, but it could be fixed. I didn’t want to over-complicate the sample code, but it wouldn’t be right to hold that bit of information back from you, so there you have it.
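To make the "better solution" above concrete, here is one way it might look. This is a sketch of the idea, not the code used in this article: timers_check_two_phase() and demo_count() are hypothetical names, and struct timer and timer_add() are repeated only so the snippet compiles on its own.

```c
#include <stddef.h>
#include <stdint.h>

struct timer {
    volatile uint32_t ticks_remaining;
    void (*callback)(struct timer *, void *);
    void *callback_data;
    struct timer *next;
};

static struct timer *active_timers_head = NULL;
static struct timer *active_timers_tail = NULL;

void timer_add(struct timer *t)
{
    t->next = NULL; /* must happen before t becomes reachable */
    if (!active_timers_tail) {
        active_timers_tail = t;
        active_timers_head = t;
    } else {
        active_timers_tail->next = t;
        active_timers_tail = t;
    }
}

/* Hypothetical variant of timers_check(): unlink first, call back afterwards. */
void timers_check_two_phase(void)
{
    struct timer *expired_head = NULL;
    struct timer *expired_tail = NULL;
    struct timer *prev_t = NULL;
    struct timer *t = active_timers_head;

    /* Phase 1: move every expired timer onto a private list. */
    while (t) {
        struct timer *next_t = t->next;
        if (t->ticks_remaining == 0) {
            /* Unlink from the active list first, same ordering as before. */
            if (t == active_timers_head) active_timers_head = next_t;
            else prev_t->next = next_t;
            if (t == active_timers_tail) active_timers_tail = prev_t;

            /* Nothing the interrupt can reach points at t any more. */
            t->next = NULL;
            if (expired_tail) expired_tail->next = t;
            else expired_head = t;
            expired_tail = t;
        } else {
            prev_t = t;
        }
        t = next_t;
    }

    /* Phase 2: the active list is no longer being walked, so callbacks
     * are free to add to it (or, in the future, remove from it). */
    while (expired_head) {
        struct timer *done = expired_head;
        expired_head = done->next;
        done->next = NULL;
        done->callback(done, done->callback_data);
    }
}

/* Example callback: counts how many timers fired. */
static void demo_count(struct timer *t, void *data)
{
    (void)t;
    (*(int *)data)++;
}
```

The key detail in phase 2 is that the next expired timer is fetched before the callback runs, so a callback that re-adds its own timer cannot disturb the private list.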

timers_init

If you’ve made it this far, congratulations. You understand the most difficult part of this whole article. This is the last function belonging to the timer module, and it’s really easy to follow. All it does is enable the SysTick timer and its interrupt.

The SysTick_Config() function is provided by CMSIS, so you can call it on any processor that works with CMSIS. You can also see its source code (it’s in core_cm0.h in the CMSIS project). You provide it with the number of ticks of the SysTick timer that should happen between its firings. In this case, I called it with the parameter “SystemCoreClock / 1000”. SystemCoreClock is a variable provided by CMSIS that tells you the current clock rate of the processor in Hz (in our case, it will be 48,000,000, meaning 48 MHz — this is the default that the NXP-provided CMSIS libraries configure it for, although you can change it). It turns out that the SysTick timer also operates at the same clock rate (well, it can be configured for that clock rate, and that’s how the CMSIS library configures it).

By passing the value 48,000,000 / 1000 = 48,000 to SysTick_Config(), we are telling the timer to fire every 48,000 ticks of the SysTick timer. Since there are 48,000,000 ticks per second, 48,000 ticks comes out to 1/1000th of a second, or a millisecond. That’s how I figured out the value to pass to it.
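The same arithmetic can be written as a small helper, which also makes one hardware limit visible: the SysTick reload register is only 24 bits wide. This is my own sketch of the calculation, not a copy of what SysTick_Config() does internally, and the demo_ names are hypothetical.

```c
#include <stdint.h>

#define DEMO_SYSTICK_MAX_RELOAD 0x00FFFFFFu /* SysTick's reload register is 24 bits wide */

/* Ticks to request for a given interrupt rate, or 0 if it would not fit. */
static uint32_t demo_ticks_per_interrupt(uint32_t core_clock_hz, uint32_t interrupts_per_sec)
{
    if (interrupts_per_sec == 0) {
        return 0;
    }
    uint32_t ticks = core_clock_hz / interrupts_per_sec;
    /* The hardware is loaded with ticks - 1, and that value must fit in 24 bits. */
    if (ticks == 0 || ticks - 1 > DEMO_SYSTICK_MAX_RELOAD) {
        return 0;
    }
    return ticks;
}
```

At 48 MHz, demo_ticks_per_interrupt(48000000, 1000) gives the 48,000 from above. Asking for one interrupt per second would need 48,000,000 ticks, which does not fit in 24 bits, so the helper returns 0 for that case.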

If you’re in LPCXpresso and you hold down the control key, the SysTick_Config() function should turn into a link as you hover over it. Click with the control-key still held down and it should jump to the source code of SysTick_Config() in core_cm0.h — a nice little trick that I use all the time. You can see that the function sets the LOAD register of the SysTick peripheral, which configures how often the counter will reset. This is exactly the same feature I described in my article about timers when I said that some timers support the ability to reset their counters back to zero after a match. We end up with a repeating interrupt without having to do any cleanup work each time the interrupt fires. (To be exact, I believe the SysTick counter actually starts at the LOAD value and counts down to 0, rather than counting up to LOAD, but the idea is exactly the same–just in reverse.) Other than that, the rest of the function enables the timer and its interrupt. That’s really all there is to it.

main.c

I mentioned main.c earlier, but we didn’t put any code into it. Now, it’s time to get that done. Open up main.c. You should see some auto-generated CRP stuff at the top of the file (that’s for code read protection; leave it in place as is) and a pretty barebones main() function that has nothing but a loop. Here’s our new main.c after adding a timer callback function and modifying main():

```c
#include "timer.h"
#include "LPC11xx.h"

#define LED_PORT LPC_GPIO0
#define LED_PIN 7

static uint32_t cur_delay = 1000;
static uint32_t direction = 0;

void timer_expired(struct timer *t, void *data)
{
    (void)data; // eliminates an unused variable compiler warning

    // Figure out the new blink delay
    if (direction == 0)
    {
        if (cur_delay > 50) cur_delay -= 25;
        else direction = 1;
    }
    else
    {
        if (cur_delay < 1000) cur_delay += 25;
        else direction = 0;
    }

    // Reschedule the timer
    t->ticks_remaining = cur_delay;
    timer_add(t);

    // Toggle the LED
    LED_PORT->DATA ^= (1 << LED_PIN);
}

int main(void)
{
    // No interrupts while we're initializing
    __disable_irq();

    // Set LED as output
    LED_PORT->DIR |= (1 << LED_PIN);
    LED_PORT->DATA |= (1 << LED_PIN);

    // Set up the timer system and add our timer to it
    static struct timer t;
    t.callback = timer_expired;
    t.callback_data = 0; // unused
    t.ticks_remaining = cur_delay;
    timers_init();
    timer_add(&t);

    __enable_irq();

    while (1)
    {
        // Simple main loop. Just check for any timers that have expired...
        timers_check();

        // And wait for the next interrupt to save power.
        __WFI();
    }
}
```

The first function, timer_expired, is our timer callback function. It changes the delay until the next time it’s called, and reschedules itself. Then, it toggles the LED. This will have the effect of making the LED blink faster and faster, and then when the delay finally reaches 50 milliseconds, it’ll start blinking slower and slower until the delay gets back up to 1000 milliseconds, and then the cycle repeats. Recall that XORing a bit will toggle it. Also, remember when we said the LED was connected to GPIO port 0, pin 7? That’s why we’re referencing LPC_GPIO0, and that’s why LED_PIN is defined as 7.
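If you want to convince yourself of how the delay ramps without flashing the board, the update rule can be pulled out and run on a PC. The sketch below copies the if/else logic from timer_expired() into hypothetical demo_ functions and counts how many callbacks one full cycle takes.

```c
#include <stdint.h>

struct demo_ramp {
    uint32_t cur_delay; /* milliseconds until the next toggle */
    uint32_t direction; /* 0 = speeding up, 1 = slowing down */
};

/* Same update rule as timer_expired(), minus the hardware access. */
static void demo_ramp_step(struct demo_ramp *r)
{
    if (r->direction == 0) {
        if (r->cur_delay > 50) r->cur_delay -= 25;
        else r->direction = 1;
    } else {
        if (r->cur_delay < 1000) r->cur_delay += 25;
        else r->direction = 0;
    }
}

/* Count how many callbacks it takes to get back to the starting state. */
static uint32_t demo_ramp_period(void)
{
    struct demo_ramp r = { 1000, 0 };
    uint32_t steps = 0;
    do {
        demo_ramp_step(&r);
        steps++;
    } while (!(r.cur_delay == 1000 && r.direction == 0));
    return steps;
}
```

A full cycle comes out to 78 callbacks: 38 steps down from 1000 to 50, one callback that only flips the direction, 38 steps back up, and one more flip. Because the flipping callback leaves the delay unchanged, the LED spends two consecutive periods at each extreme.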

main() is really simple. __disable_irq() and __enable_irq() are macros (well, actually, static inline functions that end up essentially being macros) that resolve to assembly instructions for disabling and enabling all interrupts. The reason we disable interrupts is to ensure we can initialize everything safely before interrupts start bombarding us. The meat of the main() function is simple. Initialize the timers, add our timer to the list (see how we set timer_expired() as the callback?), and then go into an infinite loop calling timers_check(). __WFI() is another static inline function that resolves to an assembly instruction that tells the microcontroller to wait until another interrupt occurs. It’s not essential, but it probably saves some power (and thus, heat).

Compiling and flashing

Congratulations! You’ve made it through the entire program! To compile it, right-click on the project and choose Build Project. Hopefully, you won’t get any errors (the Console and Problems tabs at the bottom of the window are useful for discovering what’s going on). Assuming it compiles correctly, it’s time to try flashing it.

Plug in the LPCXpresso board, and some drivers may install. Click the Debug ‘LPCXpressoTest’ button in the Quickstart tab in the bottom left corner of the LPCXpresso window. You should eventually end up with a screen showing you paused at the beginning of main() and waiting for you to go. At the top right corner of the window, you should see a button with a green arrow. This is your Resume button. Nearby, there are also buttons such as Step Over and Step Into, just like you would normally see with a debugger. Hover over them and read the tooltips to see what they do. Click the Resume button and your program should happily run. You should see the blink rate slowly speeding up until it gets really fast and starts slowing down again.

When you’re done, click the red square (Terminate) button to exit debugging. That’s it!

Future improvements

This program is all right, but I didn’t put as much time into it as I might have liked. Here are some challenges that I thought of in case you’re bored and feel like working on some programming:

- Keep the timer list ordered by expiration time, so you only have to check the beginning of the list until you’ve found a timer that hasn’t expired yet. This would increase the time it takes to add an item to the timer list — it would no longer be an O(1) operation — but it would decrease the time taken by the periodic “check” function, which is probably a better optimization.

- Keep a single global “ticks” variable that’s incremented by the timer interrupt handler. When a timer is added to the list, keep track of what the tick value was at that point (in a member of the struct). Then, just check the difference between the current tick value and the start value until enough time has elapsed. All of that calculation can be done in the main loop, so the interrupt handler does nothing except increment the ticks variable. That’s probably a better way to implement this timer system. I made the code a bit more complicated in this tutorial in order to demonstrate how much fun interrupt safety can be. If anyone is interested (and nobody feels like doing it themselves), I can show a concrete example of what I’m talking about. One thing to keep in mind is that the ticks variable will eventually wrap around from 0xFFFFFFFF to 0. If you use subtraction (now_ticks - begin_ticks) to determine the amount of time that has elapsed, the calculation should still come out fine despite the wrapping.

- Remove all expired timers from the timer list before calling any callbacks, as I described in my rambling about the timers_check() function. If in the future we added a timer_remove() function that allowed a timer to be removed from the list before it expired, and a callback called that function, the check() function could end up out of sync with the list because the “prev_t” or “next_t” variable might no longer be correct. By removing all expired timers and getting out of that loop before calling any callbacks, the callbacks would be free to do whatever they wanted to the active timers list.
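As a starting point for the second challenge, here is a sketch of the wrap-safe subtraction. The demo_ names are hypothetical, and demo_ticks stands in for the global counter that the SysTick handler would increment.

```c
#include <stdbool.h>
#include <stdint.h>

static volatile uint32_t demo_ticks = 0; /* would be incremented by the SysTick handler */

struct demo_deadline {
    uint32_t begin_ticks; /* value of demo_ticks when the timer was started */
    uint32_t duration;    /* how many ticks to wait */
};

static void demo_deadline_start(struct demo_deadline *d, uint32_t duration)
{
    d->begin_ticks = demo_ticks;
    d->duration = duration;
}

/* Unsigned subtraction wraps modulo 2^32, so this stays correct across the rollover. */
static bool demo_deadline_expired(const struct demo_deadline *d)
{
    return (uint32_t)(demo_ticks - d->begin_ticks) >= d->duration;
}
```

For example, a 32-tick deadline started at 0xFFFFFFF0 is still pending when the counter has wrapped to 0x0000000F (31 ticks elapsed) and expires at 0x00000010 (32 ticks elapsed). The one requirement is that the deadline gets checked at least once before a full 2^32 ticks go by.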

Conclusion

For those of you here because of the review, the LPCXpresso OM11049 board is pretty cool. I love the Cortex-M0 microcontroller on it. The really nice thing about this particular board is they don’t hook anything up to any pins (except for the single LED). If you want to design your own complete circuit, you can do it and not have to worry about pins being used by other devices on the board. That’s really flexible if you’re in the mood for wiring something up on a breadboard. If you solder some 0.1″ pitch headers to the board, it should plug directly into a breadboard. If you’re not into soldering, you can still at least play around with the LED or buy one of the baseboards. Seriously though, I’d recommend soldering the headers onto the board if at all possible. I think you could do some really cool stuff on a breadboard with it. You can buy it at Newark or Farnell.

For those of you here because of the microcontroller tutorial, I hope you’ve had fun with this. I wanted to move away from a bunch of theoretical stuff this time and show some actual microcontroller peripherals in action. I realize that the CMSIS code kind of shielded you from the inner workings of the timer, but I’m hoping that looking at the code of SysTick_Config() helped a little bit. If you take anything from this tutorial, I would say the most important part was the interrupt safety examples and my explanations for why I did things in the order I did them to preserve the interrupt safety of the code. If you understand those concepts, you’re well on your way to practicing safe, interrupt-protected embedded coding!