Way back in part 2 of this series, I first got my Chumby 8 booting into a newer Linux kernel. (Here are links to parts 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, and 11 if you want to read the rest of the saga). At that point early on in the project, I had to get the UART driver working. I didn’t spend much time talking about the UART in that post, but it actually gave me a small challenge that I recently had to revisit. I thought it would be fun to tell the full story of the UART struggles I ran into.

When I was first figuring out how to configure my new kernel, I noticed that the pxa168.dtsi file included in the mainline kernel already had the UARTs added. They each had two compatible strings, in order of priority: mrvl,mmp-uart and intel,xscale-uart. This makes sense because Intel’s XScale architecture was used for many things, including the PXA series. Intel sold the PXA line to Marvell in 2006, and Marvell later designed the PXA16x processor used in the Chumby 8.

Searching through the kernel sources, I found three drivers that reference the mrvl,mmp-uart compatible string: 8250_pxa, 8250_of, and the deprecated pxa driver. I originally tried 8250_pxa, but I ran into a problem with it. During large transmits, for example when typing “dmesg” after the kernel booted, it would drop a ton of characters. Here’s a small excerpt of a modern recreation of the issue in a newer kernel:

Welcome to Buildroot
buildroot login: root
# dmesg
[ 0.000000] Booting Linux on physical CPU 0x0
[ 0.000000] Linux version 6rm-linux-gcc.br_real (Buildroot ) 10.3.0, GNU ld (GNU Binutils) :30:31 PDT 2024
model: Chumby Industries Chumby: ignoring loglevel setting. instruction cache
[ cache writeback
[ 0.000000]x06ffffff (16384 KiB) nomap non- 0.000000] cma: Reserved 8 MiB0.000000] Zone ranges:
[ 0.0000000000-0x0000000007ffffff]
[ for each node
[ 0.000000] E000000] node 0: [mem 0x00000
[ 0.000000] node 0: [memffffff]
[ 0.000000] node 00000007ffffff]
[ 0.000000] 000000000000-0x0000000007ffffff]r0 d32768 u32768 alloc=1*32768
[ 0.000000] Kernel commandgnore_loglevel rootwait usb-stor/mmcblk0p2
[ 0.000000] Dentr4 (order: 4, 65536 bytes, linearsh table entries: 8192 (order: 300000] Built 1 zonelists, mobili512
[ 0.000000] mem auto-iniap free:off
[ 0.000000] Memo68K kernel code, 213K rwdata, 15, 28216K reserved, 8192K cma-reslign=32, Order=0-3, MinObjects=0] rcu: Preemptible
hierarchical 00] rcu: RCU calculated value of0 jiffies.
[ 0.000000] NR_IRd irqs: 16
[ 0.000000] rcu: izes based on contention.
[ os2-cache, disable it
[ 0.00etch.
[ 0.000000] Tauros2: D 0.000000] Tauros2: Enabling L2 L2 cache support initialised in d_clock: 32 bits at 3250kHz, res4198758ns
[ 0.000054] clocksffff max_cycles: 0xffffffff, max0.000303] Console: colour dummy ibrating delay loop... 795.44 Bo247] CPU: Testing
write buffer c_max: default: 32768 minimum: 30sh table entries: 1024 (order: 00644] Mountpoint-cache hash tablbytes, linear)
[ 0.044475] Sr 0x100000 - 0x100058
[ 0.04lementation.

Rather than look deeper into this back in 2022, I just tried a different driver instead. 8250_of is basically just another frontend for the same underlying 8250 driver, so I assumed it would have the same problem and tried the deprecated pxa driver (CONFIG_SERIAL_PXA) instead. This older driver worked completely fine out of the box. I ignored a big warning about it in Kconfig: “Unless you have a specific need, you should use SERIAL_8250_PXA instead of this.” I really didn’t feel like jumping into a UART driver rabbit hole that early in the project, especially knowing that I had a working solution. So I just forgot about it. Don’t worry if you think I’m ignoring this problem after describing it; we’ll revisit it later in this post.

Fast forward to the release of the Linux 6.9 kernel in May of 2024. I was still using the deprecated driver and it had been working great. Ever since I started on this whole project, I had been occasionally rebasing my remaining non-upstreamed patches on top of newer kernels when they came out. When Linux 6.9 arrived, I did my normal rebase process. I instantly noticed a problem: my console output would stop prematurely. At startup, the login prompt wouldn’t show up:

Initializing random number generator: OK
Saving random seed: SKIP (read-only file system detected)
Starting haveged: haveged: command socket is listening at fd 3
OK
Starting system message bus: done
Starting network: OK
Starting Xorg: [ 5.866029] libertas_sdio mmc1:0001:1 wlan0: Marvell WLAN 802.11 adapter
[ 5.888985] libertas_sdio mmc1:0001:1: Runtime PM usage count underflow!
OK

I could force the rest of the missing output to transmit if I typed a character on the console. For example, here’s the result of pressing enter:

Initializing random number generator: OK
Saving random seed: SKIP (read-only file system detected)
Starting haveged: haveged: command socket is listening at fd 3
OK
Starting system message bus: done
Starting network: OK
Starting Xorg: [ 5.866029] libertas_sdio mmc1:0001:1 wlan0: Marvell WLAN 802.11 adapter
[ 5.888985] libertas_sdio mmc1:0001:1: Runtime PM usage count underflow!
OK
Welcome to Buildroot
buildroot login:
Welcome to Buildroot
buildroot login:

See how I got two buildroot login prompts? This means the initial prompt wasn’t lost; it was just delayed. Luckily this gave me a pretty easy method for reproducing the issue, so I performed a Git bisect to find where the bug was introduced. First I manually narrowed it down to between v6.8 and v6.9-rc1 using some common sense, and then I bisected from that point on:

git checkout v6.9-rc1
git bisect start
git bisect bad
git checkout v6.8
git bisect good
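Under the hood, git bisect is a binary search for the first bad commit, which is why an entire merge window only takes a dozen or so test boots. Here’s a minimal sketch of that idea in C. It assumes a linear history (real kernel history is full of merges, and git picks its test points from the commit graph), and the names first_bad_commit() and bad_from_threshold() are hypothetical. The predicate stands in for “build, cherry-pick my patches, boot, and check whether the login prompt is late.”

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical "is this commit bad?" test: nonzero means the bug reproduces.
 * In real life this is a build + boot + "is the login prompt late?" check. */
typedef int (*bad_fn)(size_t idx, void *ctx);

/* Binary search over a linear history of n commits, where index 0 is known
 * good and index n - 1 is known bad. Returns the first bad index and stores
 * the number of commits that had to be tested in *tests. */
static size_t first_bad_commit(size_t n, bad_fn is_bad, void *ctx,
                               unsigned *tests)
{
    size_t good = 0, bad = n - 1;  /* invariant: good passes, bad fails */
    unsigned count = 0;

    assert(n >= 2);
    while (bad - good > 1) {
        size_t mid = good + (bad - good) / 2;

        count++;
        if (is_bad(mid, ctx))
            bad = mid;
        else
            good = mid;
    }
    if (tests)
        *tests = count;
    return bad;
}

/* Example predicate: every commit at or after *ctx is bad. */
static int bad_from_threshold(size_t idx, void *ctx)
{
    return idx >= *(const size_t *)ctx;
}
```

For example, with 10,000 commits and the culprit at index 4321, first_bad_commit() returns 4321 after at most 14 tests, because each test halves the remaining range.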

After testing 13 intermediate commits (cherry-picking my custom patches on top each time), I received an answer from Git. The bisect narrowed the problem down to commit 7bfb915a597a301abb892f620fe5c283a9fdbd77, which was intended to fix an issue in the Broadcom BCM63xx UART driver. The funny part is that the issue it fixed was similar to mine: the BCM63xx driver was dropping characters at the end of transmits. It was completely dropping them, though, not just delaying them like I was seeing.

diff --git a/include/linux/serial_core.h b/include/linux/serial_core.h
index 55b1f3ba48ac..bb0f2d4ac62f 100644
--- a/include/linux/serial_core.h
+++ b/include/linux/serial_core.h
@@ -786,7 +786,8 @@ enum UART_TX_FLAGS {
 	if (pending < WAKEUP_CHARS) { \
 		uart_write_wakeup(__port); \
 \
-		if (!((flags) & UART_TX_NOSTOP) && pending == 0) \
+		if (!((flags) & UART_TX_NOSTOP) && pending == 0 && \
+		    __port->ops->tx_empty(__port)) \
 			__port->ops->stop_tx(__port); \
 	} \
 \

The change made by the commit, as well as the commit message’s explanation, made complete sense at first glance, so I thought maybe the change had exposed an existing bug in the deprecated pxa driver. There are a few more notes about the change in the original patch submission on the mailing list. The gist of it is that it prevents the driver’s stop_tx() callback from being called if tx_empty() returns false, the idea being that the UART shouldn’t be stopped until it has finished transmitting all of its queued data. This puzzled me, because you would expect this type of change to only improve the TX situation, not make it worse as I observed.
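To make the behavioral change concrete, here’s a small userspace model of just the final decision that the diff touches, before and after the commit. The two function names are hypothetical, and UART_TX_NOSTOP is borrowed from serial_core.h but given an arbitrary value here; the real macro does a lot more work around this one check.

```c
#include <assert.h>
#include <stdbool.h>

#define UART_TX_NOSTOP 0x1u  /* arbitrary value, for this model only */

/* Pre-6.9 rule: stop TX as soon as the kernel's buffer is empty. */
static bool should_stop_tx_before(unsigned flags, unsigned pending)
{
    return !(flags & UART_TX_NOSTOP) && pending == 0;
}

/* 6.9 rule: additionally require the hardware to report that it has
 * finished sending everything (tx_empty() returning true). */
static bool should_stop_tx_after(unsigned flags, unsigned pending,
                                 bool tx_empty)
{
    return !(flags & UART_TX_NOSTOP) && pending == 0 && tx_empty;
}
```

The case that matters is pending == 0 with tx_empty false: the kernel’s buffer is drained, but the FIFO still holds characters. The old rule calls stop_tx() there; the new rule does nothing.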

I spent some time digging into the problem. At first I thought it would be difficult to debug the UART since it was also acting as my console, but luckily, I had Wi-Fi fully working at this point. So I could SSH into the device to access printk output without depending on it actually going out through the UART. This would have been much trickier to debug earlier in the project, so I was very happy I had everything else fully working when this problem popped up!

With the UART output clearly hung up, I could type:

echo hi > /dev/ttyS0

in an SSH session and nothing would show up in my minicom window, confirming that trying to send more data wouldn’t fix it. But then as soon as I typed a character in minicom — in other words, caused a byte to be received by the UART — all of the delayed data would finally be transmitted, including the “hi”.

As you may have guessed by the fact that it’s supported by the 8250 driver, the PXA16x’s UART is pretty much just a standard 16550A UART. There are a few differences — notably, the RX and TX FIFOs are 64 bytes, and there’s a special “UART unit enable” bit in the interrupt enable register that has to be turned on before it will operate at all. Fortunately, I have some experience dealing with this type of UART so I had some ideas about where to look.

I got the UART back in a frozen state and then dumped a few registers:

# devmem 0xd4017014 32
0x00000060
# devmem 0xd4017004 32
0x00000057
# devmem 0xd4017008 32
0x000000C1
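Those offsets come from the standard 16550 register numbering, with each register placed on a 4-byte boundary (the register index shifted left by 2). Here’s a quick sketch of the address math. The base address 0xd4017000 is simply inferred from the devmem addresses above, and uart_reg_addr() is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

/* Standard 16550 register indexes (same numbering as Linux's serial_reg.h). */
enum uart_reg {
    REG_RBR_THR = 0,  /* receive buffer (read) / transmit holding (write) */
    REG_IER     = 1,  /* interrupt enable */
    REG_IIR     = 2,  /* interrupt identification (read) / FCR (write) */
    REG_LCR     = 3,  /* line control */
    REG_MCR     = 4,  /* modem control */
    REG_LSR     = 5,  /* line status */
};

#define UART_BASE 0xd4017000u  /* inferred from the devmem addresses above */
#define REG_SHIFT 2            /* registers are spaced 4 bytes apart */

static uint32_t uart_reg_addr(uint32_t base, enum uart_reg reg)
{
    return base + ((uint32_t)reg << REG_SHIFT);
}
```

So LSR (index 5) lands at 0xd4017014, IER (index 1) at 0xd4017004, and IIR (index 2) at 0xd4017008, matching the three reads above.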

Let’s go through these one-by-one. The first register at offset 0x14 is the line status register (LSR):

- Bit 7 = 0 –> no receive FIFO errors

- Bit 6 = 1 –> transmitter is empty

- Bit 5 = 1 –> transmit FIFO is at most half full

- Bit 4 = 0 –> no break signal has been received

- Bit 3 = 0 –> no framing error

- Bit 2 = 0 –> no parity error

- Bit 1 = 0 –> no overrun error (no data has been lost)

- Bit 0 = 0 –> no data has been received
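Decoding registers by hand gets tedious, so here’s a tiny table-driven decoder for the LSR bits listed above. The short bit names follow the usual 16550 naming used in Linux’s serial_reg.h (UART_LSR_THRE, UART_LSR_TEMT, and so on); the struct, table, and function names are my own and hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct bit_name {
    uint32_t mask;
    const char *name;
};

/* 16550-style LSR bits (same masks as the UART_LSR_* definitions). */
static const struct bit_name lsr_bits[] = {
    { 0x01, "DR"    },  /* data ready (received data available) */
    { 0x02, "OE"    },  /* overrun error */
    { 0x04, "PE"    },  /* parity error */
    { 0x08, "FE"    },  /* framing error */
    { 0x10, "BI"    },  /* break received */
    { 0x20, "THRE"  },  /* TX FIFO at most half full on the PXA */
    { 0x40, "TEMT"  },  /* transmitter completely empty */
    { 0x80, "FIFOE" },  /* error somewhere in the RX FIFO */
};

/* Writes the names of all set bits, space-separated, into buf (len bytes).
 * Output is truncated rather than overflowing if buf is too small. */
static void decode_bits(uint32_t value, const struct bit_name *bits,
                        size_t nbits, char *buf, size_t len)
{
    buf[0] = '\0';
    for (size_t i = 0; i < nbits; i++) {
        if (!(value & bits[i].mask))
            continue;
        if (buf[0] != '\0')
            strncat(buf, " ", len - strlen(buf) - 1);
        strncat(buf, bits[i].name, len - strlen(buf) - 1);
    }
}
```

Feeding it the 0x60 I read back produces “THRE TEMT”: room in the FIFO and a completely idle transmitter, with no errors and no received data.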

Interesting. The line status register was telling me that the UART was completely idle, so nothing on the hardware side should have been holding up the transmission. Let’s look at the interrupt enable register (IER, offset 0x04) next:

- Bit 8 = 0 –> high speed mode disabled

- Bit 7 = 0 –> DMA disabled

- Bit 6 = 1 –> unit is enabled

- Bit 5 = 0 –> NRZ coding is disabled

- Bit 4 = 1 –> receiver data timeout interrupt is enabled

- Bit 3 = 0 –> modem status interrupt is disabled

- Bit 2 = 1 –> receiver line status interrupt is enabled

- Bit 1 = 1 –> transmit FIFO data request interrupt is enabled

- Bit 0 = 1 –> receiver data available interrupt is enabled

This confirmed that the pxa driver definitely wanted to be alerted when there was room to put more data into the FIFO. Everything looked normal so far. Next, I decoded the actual interrupt status in the IIR register at offset 0x08:

- Bits 7:6 = 3 –> FIFO mode is selected

- Bit 5 = 0 –> no DMA end of descriptor chain interrupt active

- Bit 4 = 0 –> no auto-baud lock

- Bit 3 = 0 –> no timeout interrupt is pending

- Bits 2:1 = 0 –> ignored, because bit 0 = 1

- Bit 0 = 1 –> no interrupt is pending
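For reference, the low four bits of IIR encode the interrupt source the same way as on a standard 16550, and bits 7:6 read back as 3 when the FIFOs are enabled. The sketch below ignores the PXA-specific bits 4 and 5 (auto-baud lock and DMA end of descriptor chain), and the helper names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Interrupt source encoded in IIR bits 3:0 on a 16550-style UART.
 * Bit 0 set means "no interrupt pending". */
static const char *iir_source(uint32_t iir)
{
    switch (iir & 0x0f) {
    case 0x01: return "none";
    case 0x06: return "receiver line status";
    case 0x04: return "receive data available";
    case 0x0c: return "receive timeout";
    case 0x02: return "TX FIFO data request";
    case 0x00: return "modem status";
    default:   return "reserved";
    }
}

/* Bits 7:6 read back as 3 when the FIFOs are enabled. */
static bool iir_fifos_enabled(uint32_t iir)
{
    return ((iir >> 6) & 0x3) == 0x3;
}
```

With the 0xC1 from the dump, iir_source() returns “none” and iir_fifos_enabled() is true, matching the decode above.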

I kind of expected a TX interrupt to be pending because the FIFO was empty, but according to the register, no interrupt was active. That totally makes sense, though. If an interrupt were pending, I wouldn’t be seeing this problem to begin with!

I noticed that if I manually disabled and re-enabled the TX interrupt by toggling bit 1 of IER off and back on, that fixed the issue and transmitted any pending data:

devmem 0xd4017004 32 0x55 ; devmem 0xd4017004 32 0x57
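The two values in that one-liner are just the 0x57 I read earlier with bit 1 (the TX interrupt enable, called UART_IER_THRI in Linux’s serial_reg.h) cleared and then set again. Here’s the arithmetic with the IER bits spelled out; the macro and function names are hypothetical. Re-enabling the TX interrupt while there’s room in the FIFO is what makes this UART raise a fresh TX interrupt, as the experiment above showed.

```c
#include <assert.h>
#include <stdint.h>

/* IER bits from the decode above. */
#define IER_RDI   0x01u  /* receiver data available */
#define IER_THRI  0x02u  /* transmit FIFO data request */
#define IER_RLSI  0x04u  /* receiver line status */
#define IER_RTOIE 0x10u  /* PXA: receiver data timeout */
#define IER_UUE   0x40u  /* PXA: UART unit enable */

/* Computes the two values written by the devmem one-liner:
 * TX interrupt disabled, then enabled again. */
static void ier_toggle_thri(uint32_t ier, uint32_t *off, uint32_t *on)
{
    *off = ier & ~IER_THRI;
    *on  = ier | IER_THRI;
}
```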

Okay, that all checked out. I could manually re-trigger the interrupt, but I still wanted to understand why it wasn’t firing to begin with. Or was it? I added some printk statements to various points in the pxa driver and retested. I temporarily changed my bootargs so that the kernel wouldn’t use the UART as a console, because that would completely interfere with my testing.

The problem quickly stuck out to me as I dug through my debug output. First, I need to explain the call flow in the pxa driver here. Whenever a UART interrupt occurs, it checks the line status register to see if there is room in the TX FIFO to transmit a set of data. Here’s the relevant part of the IRQ handler:

static inline irqreturn_t serial_pxa_irq(int irq, void *dev_id)
{
	...
	lsr = serial_in(up, UART_LSR);
	...
	if (lsr & UART_LSR_THRE)
		transmit_chars(up);
	...
}

If there is room, it calls transmit_chars():

static void transmit_chars(struct uart_pxa_port *up)
{
	u8 ch;

	uart_port_tx_limited(&up->port, ch, up->port.fifosize / 2,
		true,
		serial_out(up, UART_TX, ch),
		({}));
}

This calls a helper macro, uart_port_tx_limited(), which in turn uses a more generic macro, __uart_port_tx(). __uart_port_tx() happens to be the macro that was slightly changed by the commit that broke this driver. It’s pretty long, so I’m not going to repeat it here, but in summary, it writes characters into the UART’s FIFO until it either reaches its limit (half of the FIFO size in this case) or runs out of characters to send. Then it potentially calls the UART’s stop_tx() callback. The stop_tx() function in the pxa driver disables the TX interrupt.

Back to the actual problem I was seeing. The pxa driver was getting a TX interrupt! It wasn’t being lost. The problem was that it was getting a TX interrupt when there was nothing left in the kernel’s buffer to transmit, but the FIFO still held some characters that hadn’t been sent out yet. In other words, the pxa driver’s tx_empty() callback was returning false, so stop_tx() wasn’t being called. That sounds harmless, but with a 16550A-style UART, when you get a TX interrupt, you need to either write more characters into the FIFO or disable the interrupt. If you do neither, leaving the TX interrupt enabled locks up the UART: a new TX interrupt will never be signaled, and you’re stuck waiting forever. That’s what was happening here. Prior to Linux 6.9, this was fine, because the TX interrupt would always be disabled as soon as it was determined that there was nothing left to send. The change in Linux 6.9 to also check tx_empty() created a situation where the driver could do nothing at all in response to a TX interrupt. You just can’t do that with this type of UART.
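To double-check that reasoning, it helps to play it out step by step. Below is a deliberately simplified userspace model of the pxa TX path, not a description of the real hardware or driver. The 64-byte FIFO, 32-character trigger, and 32-character chunks come from this post; everything else is an assumption. In particular, the armed flag is a stand-in for “the UART still owes the driver one TX interrupt,” and the modeled start_tx() only touches IER when the TX interrupt is currently disabled, which is how the pxa driver’s start_tx() behaves as far as I can tell. All names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define FIFO_SIZE 64u  /* PXA16x TX FIFO depth */
#define TRIGGER   32u  /* TX interrupt when the FIFO is at most half full */
#define CHUNK     32u  /* pxa driver loads fifosize / 2 per interrupt */

/* Simplified model of a 16550-style TX path; all names are hypothetical. */
struct model {
    unsigned buf;   /* chars waiting in the kernel's circular buffer */
    unsigned fifo;  /* chars sitting in the hardware TX FIFO */
    bool thri;      /* TX interrupt enabled in IER */
    bool armed;     /* hardware still owes us one TX interrupt */
    bool new_rule;  /* true: Linux 6.9 rule (stop only if tx_empty) */
};

/* Enabling THRI while there's room in the FIFO raises a TX interrupt;
 * if THRI is already enabled, nothing is written and nothing happens. */
static void start_tx(struct model *m)
{
    if (!m->thri) {
        m->thri = true;
        m->armed = true;
    }
}

static void uart_write(struct model *m, unsigned n)
{
    m->buf += n;
    start_tx(m);
}

/* TX interrupt handler: fill the FIFO, then maybe call stop_tx(). */
static void tx_irq(struct model *m)
{
    unsigned n = m->buf < CHUNK ? m->buf : CHUNK;

    if (n > FIFO_SIZE - m->fifo)
        n = FIFO_SIZE - m->fifo;
    m->armed = false;       /* this interrupt has been consumed */
    m->fifo += n;
    m->buf -= n;
    if (n > 0)
        m->armed = true;    /* new data will drain back to TRIGGER later */

    bool tx_empty = (m->fifo == 0);
    if (m->buf == 0 && (!m->new_rule || tx_empty))
        m->thri = false;    /* stop_tx(): disable the TX interrupt */
}

/* One character time: maybe deliver an interrupt, then shift out a char. */
static void tick(struct model *m)
{
    if (m->armed && m->thri && m->fifo <= TRIGGER)
        tx_irq(m);
    if (m->fifo > 0)
        m->fifo--;
}

/* Send 40 chars, let everything settle, then send 5 more.
 * Returns how many chars are left stuck in the kernel's buffer. */
static unsigned simulate(bool new_rule)
{
    struct model m = { .new_rule = new_rule };

    uart_write(&m, 40);
    for (int i = 0; i < 200; i++)
        tick(&m);
    uart_write(&m, 5);
    for (int i = 0; i < 200; i++)
        tick(&m);
    return m.buf;
}
```

In this model, simulate(false) returns 0 while simulate(true) returns 5. Under the 6.9 rule, the handler consumes the last TX interrupt without writing anything or disabling the interrupt, so the follow-up write just sits in the buffer because start_tx() sees the interrupt as already enabled.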

I wasn’t sure exactly how to fix this, given that the original patch was actually fixing a similar issue in the BCM63xx driver. If I reverted it, it would immediately break the BCM63xx. Also, was the real problem caused by that commit, or was it a pre-existing issue that the commit exposed? My first attempt was to fix it directly in the pxa driver by ensuring that stop_tx() would be called if the buffer was empty. That patch was (nicely) rejected by Jiri Slaby, one of the maintainers of the TTY/serial layer, with the reason being that uart_port_tx_limited() should be stopping the TX. Jiri was thinking that if I was seeing this problem, other drivers using uart_port_tx_limited() and similar helpers would also be affected.

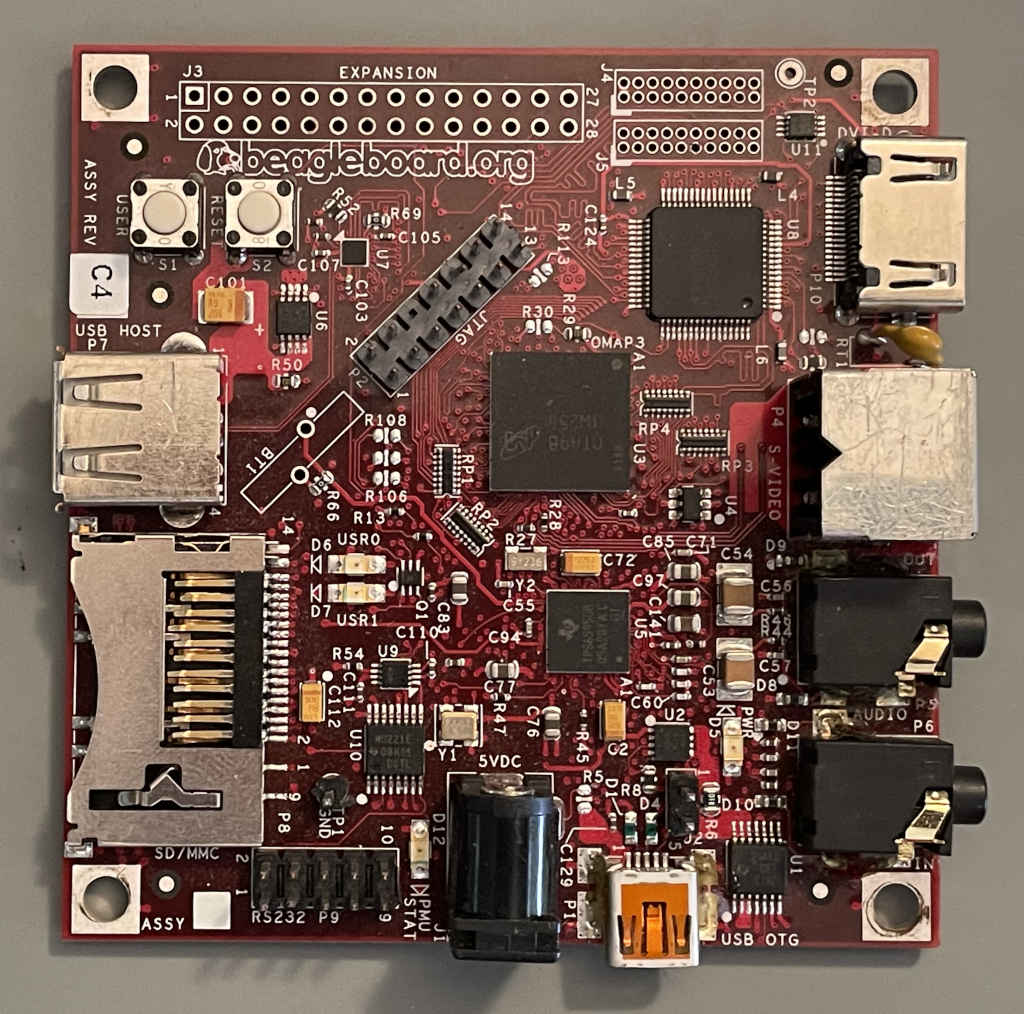

This led me back to the drawing board to think more about it. I decided it would be useful to try to validate Jiri’s thinking that other drivers should also be broken. One driver in particular stood out to me: omap-serial. I’m pretty familiar with TI’s OMAP/Sitara processors that would use this driver. It’s another 16550A-esque UART that has multiple drivers available in Linux, but unlike the old pxa driver, omap-serial isn’t deprecated. I took a detour from the Chumby and figured out how to confirm whether the OMAP serial driver was also broken. Long story short, I found an old Linaro fork of QEMU that implements OMAP3530 BeagleBoard emulation, and with enough finagling I got it to compile and run a kernel and boot into a basic buildroot rootfs. Convincing it to boot from a simulated SD card was way more work than I was counting on, and required a hacky patch to QEMU, but I figured it out.

The QEMU-emulated BeagleBoard using the omap-serial UART driver had the exact same problem! To be more accurate, it was even worse. Once the UART TX was hung up, it stayed hung up forever. I couldn’t un-stick it by causing it to receive a character like I could with the pxa driver. The reason a received character would kickstart the pxa driver’s frozen TX is that it has an errata workaround for a hardware bug. As we saw above, whenever it gets any kind of interrupt, it checks to see if it should transmit, regardless of whether the interrupt that fired is actually a TX interrupt. The omap-serial driver doesn’t have this same workaround, so TX was stuck forever.
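Boiled down, the difference looks something like the two hypothetical predicates below. The first mirrors the pxa behavior described above: on any interrupt, check LSR and refill the FIFO if there’s room. The second is a generic stand-in for a handler that only does TX work when IIR reports a TX interrupt; it illustrates the idea and is not a line-for-line copy of omap-serial. The mask values match the standard 16550 definitions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define LSR_THRE    0x20u  /* room in the TX FIFO */
#define IIR_NO_INT  0x01u  /* no interrupt pending */
#define IIR_ID_MASK 0x0eu  /* interrupt source bits */
#define IIR_THRI    0x02u  /* TX interrupt */

/* pxa-style: on ANY pending interrupt, look at LSR and refill TX if possible. */
static bool pxa_style_wants_tx(uint32_t iir, uint32_t lsr)
{
    if (iir & IIR_NO_INT)
        return false;
    return (lsr & LSR_THRE) != 0;
}

/* IIR-dispatch style: TX work only when IIR says it's a TX interrupt. */
static bool iir_dispatch_wants_tx(uint32_t iir, uint32_t lsr)
{
    (void)lsr;  /* LSR is not consulted for the TX decision */
    if (iir & IIR_NO_INT)
        return false;
    return (iir & IIR_ID_MASK) == IIR_THRI;
}
```

On a receive interrupt (IIR 0xC4) with room in the FIFO (LSR 0x61), only the first predicate decides to transmit, which is why typing a character revived the pxa driver but not omap-serial.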

This made me feel better, but I still wasn’t 100% convinced. So I went down memory lane and bought an old BeagleBoard on eBay in order to be totally sure the driver was broken on actual hardware too.

And yes, it behaved exactly the same way on hardware. Did I really need to buy more random old hardware just to test this out? Probably not…but now I have a BeagleBoard! I might talk about some fun BeagleBoard stuff in a future post. It’s a nice little addition to my repertoire. It fits perfectly into my little niche of doing modern stuff on old hardware.

I discussed this situation with Jonas Gorski, the author of the patch, to decide on a new approach that would fix those drivers while also maintaining compatibility with the bcm63xx_uart driver. What it amounted to was reverting the commit and then reintroducing his earlier V1 patch attempt that modified the bcm63xx driver to use UART_TX_NOSTOP instead of generically modifying the __uart_port_tx() macro. This is also what Jiri suggested initially.

The end result of this is: as soon as this fix is out in the wild, all of the drivers involved — pxa, omap-serial, and bcm63xx_uart — should behave correctly again. There are still some bigger questions though. As Jonas pointed out, the documentation for stop_tx() says it should stop transmitting characters as soon as possible. Does ASAP mean you should allow everything in the FIFO to finish transferring first, or is it supposed to stop transmissions immediately? Different drivers implement it differently. This inconsistency means other drivers could have the same issue observed in the BCM63xx driver. I think at this point the discussion gets a bit beyond the scope of my project. It’s interesting to see though. With so many different hardware implementations out there, it must be a nightmare to try to make changes to any of the core UART code without breaking something.

This was a very fun and unique part of my Chumby kernel upgrade project. Most of the stuff I’ve been working on isn’t being tested by others since the PXA16x is so old, and my changes have only touched the PXAxxx hardware or a single old Wi-Fi chipset. This was the first time I interacted with other current developers to figure out an issue like this that affected multiple drivers. It was definitely a positive experience!

The patch series was recently released as part of Linux 6.10-rc6, so we should see this fix included in the final 6.10 release. It will also be backported to future stable 6.9 and 6.6 releases. The original breaking patch was backported to 6.6 as well, so it needs to be fixed there too. I CC’d the stable team when I submitted it, and that backport process just began.

Now that this problem is solved, let’s go back to the original problem with the 8250_pxa driver that I mentioned at the start of this post. Why was it garbling the UART output during large transfers? I decided that if I was going to put so much effort into fixing a bug in the deprecated pxa driver, it would be crazy if I didn’t figure out the problem with the recommended 8250_pxa driver too.

It ended up being a very simple problem. I added some quick printk statements and found that the transmit function serial8250_tx_chars() was putting 64 bytes into the FIFO at a time. This seems correct at first — after all, the TX FIFO is 64 bytes in size. However, the driver sets up the FIFO control register to fire a TX interrupt when the FIFO is half empty (bit 3 [TIL] = 0). This means it’s only safe to write 32 bytes into it when an interrupt occurs. Nothing was telling the common 8250 driver code about that limitation. So every time a TX interrupt occurred, it would load 64 bytes into the TX FIFO even though there was only guaranteed to be room for 32. Of course this is going to cause a bunch of characters to be dropped!
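The arithmetic is easy to check. When the TX interrupt fires at the half-empty trigger, the FIFO can still hold up to 32 characters, so only 64 - 32 = 32 slots are guaranteed to be free, and anything written beyond that is lost. In the 8250 core, the number of bytes written per TX interrupt comes from the tx_loadsz field of struct uart_8250_port. The helper below is hypothetical and models the worst case, where the FIFO is still sitting exactly at the trigger level when the handler runs.

```c
#include <assert.h>

#define FIFO_SIZE  64u  /* PXA TX FIFO depth */
#define TX_TRIGGER 32u  /* TIL = 0: interrupt when the FIFO is half empty */

/* Chars dropped when `load` chars are written in response to a TX
 * interrupt while `level` chars are still queued in the FIFO. */
static unsigned dropped_chars(unsigned level, unsigned load)
{
    unsigned room = FIFO_SIZE - level;

    return load > room ? load - room : 0;
}
```

dropped_chars(TX_TRIGGER, 64) returns 32, while a 32-byte load never drops anything, no matter how full the FIFO is when the interrupt is serviced.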

This was an easy fix to submit upstream as a patch. I double-checked a few other PXA2xx/3xx programmer’s reference manuals just to be sure this wasn’t a PXA16x-specific thing. They all had identical UART specs, at least in terms of the FIFO size and TX interrupt trigger level, so I felt confident pushing this out across the board. It did throw me a curveball when it failed to apply cleanly to most of the stable kernels (here’s the message I received about 6.6, for example) because of small changes made to that section of the code within the past few kernel versions. Luckily, Greg KH provided some neat example commands in the failure messages that helped me fix the cherry-pick conflicts on my own and resubmit fixed patches (here’s 6.6, for example). That was kind of a fun process to go through!

I’m glad I went through and fixed these cherry-pick conflicts for the older stable kernels, because it led me to realize that the bug had only been around since Linux 5.10 or so. When I got around to backporting the patch to Linux 5.4, I realized the 8250_pxa driver in that version already worked perfectly fine without my patch. That’s because sometime between 5.4 and 5.10, the driver was modified to stop autodetecting the port type, which was deemed unnecessary because we already knew it was an XScale port. When the driver stopped autodetecting the type, it also stopped being aware that the TX IRQ threshold was 32 bytes, because the autodetection had been taking care of that. So my “Fixes:” tag blaming the initial creation of the driver was slightly wrong. It really should have pointed at the commit that stopped autodetecting the port type. Oh well. I don’t think it matters too much in the grand scheme of things. Anyway, this fix for the 8250_pxa driver was recently released as part of Linux 6.9.3, 6.6.35, and 6.1.95. It’s also slated for inclusion in Linux 6.10, having first been released in 6.10-rc4.

I kind of mentioned this earlier, but I’ll say it again. I’m not sure how easy it would have been to figure out this problem back in the summer of 2022 when I was first starting out with this project. I’m really really glad that the deprecated pxa driver was working fine back then. I think I would have gone mad trying to troubleshoot this FIFO threshold problem with the 8250_pxa driver at that point with nothing else working yet! The UART is one of the peripherals that is vital to early board bringup.

By the way, I later realized that I could have avoided this whole mess if I had just tried the 8250_of driver that I skipped way back at the beginning of this post. My original assumption about that driver being identical to 8250_pxa was incorrect — it works completely fine without any changes, because it actually runs the autodetection routine which correctly configures it so that only 32 bytes can be loaded into the TX FIFO at a time. If I had picked that driver right from the outset of this Chumby kernel project, I would have never run into any of these issues I discussed in this post today. I’m still glad I didn’t discover the 8250_of driver until the very end. It was very satisfying to fix all of these problems! Who knows how long it would have taken before someone else would have discovered that the omap-serial driver was broken in 6.9, for example? I didn’t find any other reports of problems.

Well, I think that’s about it. I don’t have any other interesting stories to tell about my Chumby 8 kernel upgrade process. I had discussed possibly getting it working with postmarketOS in past blog comments with a couple of readers, but that won’t be possible because the PXA16x is based on too old of an ARM architecture. I mean, I could build it all from scratch, but that kind of defeats the purpose when I already have buildroot working.

In the next (and final) post of this series, let’s look back at the project in summary. We’ll see which changes I was able to upstream and which changes are still sticking around in my custom branch. I’ll reflect on what I’ve learned in the past two years. It’ll be a fun way to close it out.
